Generative AI has been the talk of the business and technology world since ChatGPT exploded onto the market in late 2022. In Australia, there’s been a frantic whole-of-nation effort to understand the implications for business, government, workforces and communities.

IT professionals are at the centre of the storm. Australia is balancing an opportunity worth up to AU $115 billion (US $74 billion) against significant risks, including to data privacy and security. IT leaders are advised to educate stakeholders and be guided by business goals as they create processes for exploring and realising AI use cases.

What are the potential benefits of AI for the Australian economy?

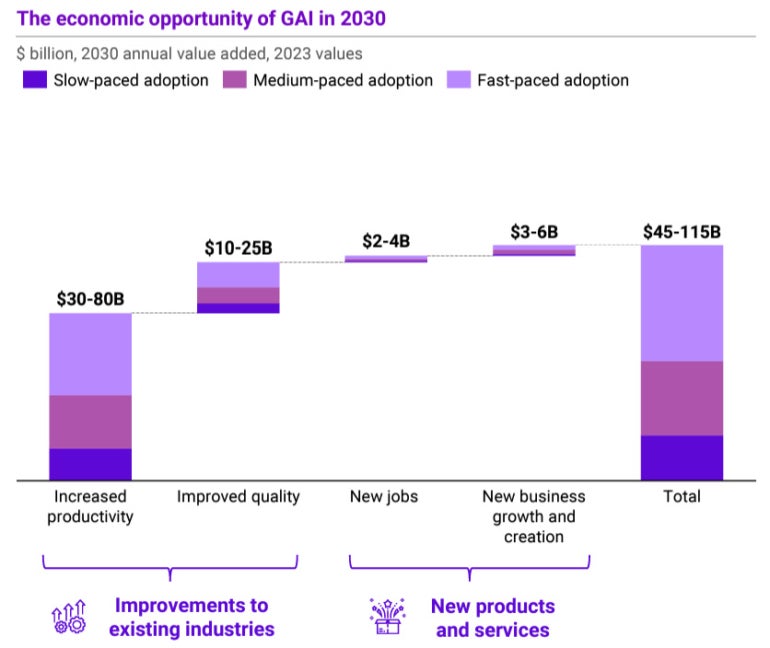

The Australian economy is well positioned to gain from generative AI technology. The Tech Council of Australia has predicted generative AI could deliver between AU $45 billion (US $28.9 billion) and AU $115 billion (US $74 billion) in value to the Australian economy by 2030.

In its Australia’s Generative AI Opportunity report, produced in collaboration with Microsoft, the council predicted:

- Up to AU $80 billion (US $51.5 billion) in value could come from increased productivity as workers use AI for some existing tasks to complete more work in less time.

- Additional value would come from higher-quality work outputs, as well as from new jobs and businesses created across the economy, such as software exports.

Healthcare, manufacturing, retail and financial services have been nominated as industries that could significantly benefit. Australia’s large existing tech talent pool, relatively high levels of cloud adoption and investments in digital infrastructure are expected to support AI’s growth (Figure A).

Figure A

What risks does generative AI bring for the Australian economy?

One of generative AI’s better-understood risks is workforce disruption, as large numbers of employees may need to upskill or retrain. In its report Generation AI: Ready or not, here we come!, Deloitte claimed 26% of jobs already faced “significant and imminent” disruption:

- Administration and operations roles have been identified as most vulnerable to the new technology, while sales, IT, human resources and talent roles will be impacted in select industries.

- The industries facing higher levels of disruption in the shorter term include financial services, information and communication technologies and media, professional services, education and wholesale trade.

Putting existential risks aside, Australian Government research also identified a number of “contextual and social risks” and “systemic social and economic risks,” ranging from the use of AI in high-stakes contexts such as health to the erosion of public discourse and rising inequality.

What are the risks and challenges facing Australian businesses using AI?

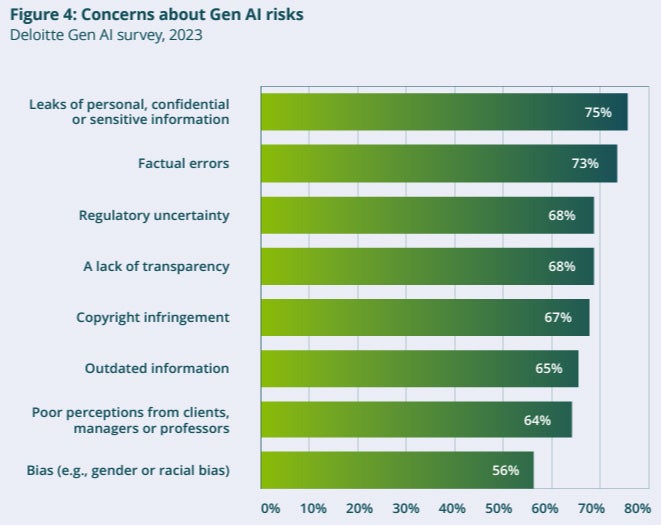

Australian business and IT leaders, as well as employees, agree the deployment of generative AI tools comes with significant risks. According to Deloitte’s survey, three-quarters of respondents (75%) were concerned about leaks of personal, confidential or sensitive information, and nearly as many (73%) were concerned about factual errors or hallucinations (Figure B). Other concerns included regulatory uncertainty, copyright infringement and racial or gender bias.

Figure B

The consensus seems to be that business approaches to governing generative AI have lagged behind adoption, leaving a “gap” that introduces risks and could hold businesses back from capitalising on opportunities. For example, Deloitte’s report found 70% of employers had yet to take action to prepare themselves and their employees for generative AI, while a GetApp survey found only about half (52%) of employers had policies in place to govern its use.

Senior IT leaders have their own technical and ethical concerns about generative AI. A Salesforce survey of IT leaders found 79% were concerned that it could create security risks and 73% were concerned about bias. Other concerns raised included:

- Generative AI would increase the company’s carbon footprint (71%).

- Employees did not have the skills to leverage it successfully (66%).

- Generative AI would not integrate into the current tech stack (60%).

- IT leaders had no unified data strategy (59%).

Australian businesses are already embracing generative AI in some form

Despite these concerns, businesses of all sizes have been enthusiastic experimenters with generative AI tools.

A recent Datacom survey of 318 business leaders in Australian companies with 200 or more employees found 72% of businesses are already utilising AI in some form. The survey also found the vast majority expected AI to bring significant changes to their organisation, with 86% of leaders believing AI integration will impact operations and workplace structures.

However, formal adoption of generative AI has been more tentative in some larger businesses, as they explore its potential while weighing up or guarding against the risks. Of the businesses with more than 200 employees that Deloitte surveyed for Generation AI: Ready or not, here we come!, only 9.5% had officially adopted AI.

Whether or not adoption is official, businesses are using AI organically through their employees. One survey found that two-thirds (67%) of Australian employees use generative AI tools at work at least a few times a week. Another survey, from software firm Salesforce, found that 90% of employees were using AI tools, including 68% who were using generative AI tools.

Generative AI is expected to become a standard business resource as it is increasingly embedded into the products businesses already use. In marketing, for example, design software firm Adobe recently made its generative AI tool, Firefly, generally available, while competitor Canva has introduced image and text generation as well as translation within its products (Figure C).

Figure C

What are Australian businesses using generative AI for?

An abundance of use cases has been identified for generative AI. Global research from McKinsey early this year explored 63 use cases across 16 business functions where applying the tools can produce one or more measurable outcomes. In larger organisations, however, much of the initial interest in generative AI has focused on marketing and sales, product and service development, service operations and software engineering.

In marketing and sales, top use cases include generating first drafts of documents or presentations, personalising marketing and summarising documents. In product development, generative AI is used to identify trends in customer needs, draft technical documents and even generate new product designs. Customer service chatbots are a popular use case, while generative AI’s ability to write code is being explored in software development.

Commonwealth Bank, one of Australia’s largest banks, is an early adopter of generative AI among big businesses. In May, it was reported that the bank was already using the technology in its call centres to answer complex questions in real time by drawing on 4,500 documents’ worth of bank policies. Generative AI was also helping the bank’s 7,000 software engineers write code, improve its apps and create more tailored offerings for customers.

Generative AI guidance has been provided to public sector agencies in Australia

Australian public sector agencies have been provided with high-level guidance on generative AI. Prepared by the Digital Transformation Agency and the Department of Industry, Science and Resources, it suggests agencies deploy AI responsibly and only in low-risk situations, keeping in mind known problems such as inaccuracy, the nature and potential bias of training data, data privacy and security, and the importance of transparency and explainability in decision-making.

In practical terms, the guidance suggested implementing an enrolment mechanism to register and approve staff user accounts for AI access, with appropriate approval processes through CISOs and CIOs, and establishing avenues for staff to report exceptions. It warned agencies off high-risk use cases, such as using AI-generated code in government systems. It also suggested agencies move to commercial arrangements for AI solutions as soon as possible.

Australian Government is taking steps to ensure ethical use of generative AI

The Australian Government commissioned the production of a Generative AI Rapid Research Information Report in early 2023 to assess the opportunities and risks of generative AI models. This was followed by the release of a public discussion paper, Safe and Responsible AI in Australia, which invited submissions and feedback from businesses and the community.

The Government also committed AU $41.2 million (US $26.53 million) to support the responsible deployment of AI in the national economy as part of its 2023-24 Federal Budget. This included funding for the National Artificial Intelligence Centre to support the Responsible AI Network, a collaboration aimed at improving responsible AI practice across the commercial sector.

Aside from recent urgent action to ban AI-generated child abuse material from search engine results, the government has been working with stakeholders, including tech firms, to evaluate how to approach AI regulation. Australia’s existing laws are expected to cover many possible AI scenarios, though there may be gaps that new regulation will need to fill.

What should IT leaders do to capitalise on generative AI?

Analysis from Gartner suggests the ongoing shift to digital in Australia will drive increasing investment in generative AI technologies in 2024, with a particular focus on tools for software development and code generation. However, Gartner also notes that generative AI and foundation models have reached the Peak of Inflated Expectations in its 2023 Hype Cycle, which suggests a Trough of Disillusionment may follow.

At Gartner’s recent Symposium/Xpo on Australia’s Gold Coast, Distinguished VP Analyst Arun Chandrasekaran told IT leaders they were likely to encounter “…a host of trust, risk, security, privacy and ethical questions” with generative AI, and they would need to “…balance business value with risks.”

Chandrasekaran said leaders should consider creating a position paper outlining the benefits, risks, opportunities and deployment roadmap, and should ensure strategy and use cases align with business goals, with clearly assigned ownership and business metrics for measurement.

Chandrasekaran suggested IT create “tiger teams” that could work with business units on ideation, prototyping and demonstration of the value of generative AI. These teams could also be tasked with monitoring industry developments and sharing valuable lessons learned from pilots across the company.

Chandrasekaran warned that IT would also need to foster responsible AI practices throughout to promote the ethical and safe use of generative AI. Employees should also be prepared for this period of upheaval through skills retraining, career mapping and emotional support resources.